We already know that AI strategy has dominated headlines over the last year—but have you considered incorporating existing AI tools into your software?

With OpenAI, Google, and Amazon’s API tools, you can now incorporate AI into software applications without spending valuable time and resources developing AI solutions from the ground up.

Although these APIs can accomplish similar goals, each one has different use cases, levels of advancement, and limitations to consider.

As we introduce these top three tools, keep in mind that you’ll need to take your specific business processes and goals into account when choosing the right platform for your needs.

AI Glossary: Breaking Down Key Terms

As you explore the available AI APIs, establishing a foundational understanding of AI terms can be useful. That way, you can understand all aspects of each tool and how they can best help you reach your goals.

AI (Artificial Intelligence)

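AI is any system that performs tasks normally associated with human intelligence. In practice, the term usually refers to an implementation of machine learning built to accomplish a task.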

It can take the form of big data processing, weather pattern analysis, protein folding, or anything with iterative and predictable patterns.

In general, this term is vague, making it easy to cross into “buzzword” territory.

Generative AI

Generative AI is an emerging field that leverages a model to “generate,” rather than analyze, content in the form of text, music, pictures, video, and more.

ChatGPT, Bard, and Bedrock are all examples of generative AI tools.

Model

The combined training, or “brain,” that plugs into an AI tool.

Models function as a database of sorts, storing what an AI tool has learned from its training data so it can better match user requests.

Web applications are built to interact with these models via an API.

Large Language Model (LLM)

An LLM is a machine learning model that consumes massive amounts of text and image data so it can interpret human language and create corresponding responses.

GPT-4, PaLM, and LLaMA are examples of large language models. DALL·E, by contrast, is an image-generation model rather than an LLM.

LLM API

An LLM API is the direct interface to an LLM hosted by an external provider. OpenAI’s API and Google’s Vertex AI are two examples.

LLM Web Apps/Tools (ChatGPT, Bard, etc.)

LLM applications are the tools you are familiar with, including ChatGPT and Bard.

Their front-end interfaces draw on specific learning models, allowing for text interaction between end users and the LLM.

AI Prompts

AI prompts are the initial or follow-up instructions given to an AI tool, shaping how it responds.

With more specific prompts, you’ll receive better answers that are more likely to align with your expectations.

AI prompts drive how the AI tool references its model and training to provide answers.

Examples of prompts are “How do I clean a toaster?” or “What are five titles for an article about AI?”

Prompts can also include detailed instructions, like “Respond in a friendly and casual tone” or “Respond in 200 words or less.”

With any generative AI, the input will heavily affect the output.
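
As a rough illustration, many chat-style LLM APIs accept a prompt as a list of role-tagged messages, with standing instructions kept separate from the question itself. The sketch below assumes that shape. The `build_messages` helper is hypothetical and is not part of any vendor’s SDK.

```python
def build_messages(question, instructions=None):
    """Assemble a chat-style prompt: optional standing instructions, then the question."""
    messages = []
    if instructions:
        # Detailed instructions shape the tone and length of the reply.
        messages.append({"role": "system", "content": " ".join(instructions)})
    # The question itself is the user's part of the prompt.
    messages.append({"role": "user", "content": question})
    return messages


messages = build_messages(
    "How do I clean a toaster?",
    instructions=[
        "Respond in a friendly and casual tone.",
        "Respond in 200 words or less.",
    ],
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the instructions separate from the question makes it easy to reuse the same tone and length rules across many different user requests.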

AI Training/Fine-Tuning

AI training, or fine-tuning, adjusts an LLM’s internal “weights” using additional data to give the model further focus.

Training can be powerful, iterative, and computationally expensive. It includes a labor-intensive data pruning component.

AI Tokens

AI tokens are the “chunks” of text that a model reads and writes as it constructs a response, piece by piece.

Processing these tokens is the core function of the model. The more tokens a request and its response involve, the more intense the computation.

For language models, a token is typically a word or a piece of a word.

In the same way that we don’t think in terms of individual letters or colors, an LLM thinks more broadly when constructing a sentence or image.

What are AI APIs, and How Do They Differ from AI Web Applications?

An API bridges the gap between two systems, allowing them to share information in real time.

From a technical standpoint, an AI API gives direct access to the model, or the “brain,” behind a web application like ChatGPT or Bard.

Remember that models are extremely complex and valuable, with a high degree of general knowledge.

Although the AI API will have slightly different rules and restrictions than the web app version, the technology, or model, behind them is the same.

Once you choose a platform, you can use its API to interact with a private instance of the model. In other words, you’re basically “renting the brain” of the application and plugging it into anything you choose, whether you’re building a chatbot, trip planner, or another system.
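
To make the “renting the brain” idea concrete, here is a minimal sketch of what a request to a hosted model can look like. It assumes OpenAI’s chat completions endpoint and a model name that were current at the time of writing, and the API key is a placeholder. The `build_chat_request` helper is hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.request

# Assumption: OpenAI's chat completions endpoint and a model name current at the
# time of writing; check the provider's documentation before relying on either.
API_URL = "https://api.openai.com/v1/chat/completions"
MODEL = "gpt-3.5-turbo"


def build_chat_request(prompt, api_key):
    """Build (but do not send) an HTTP request that asks the model one question."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request("Plan a three-day trip to Lisbon.", api_key="YOUR_API_KEY")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))

# Sending requires a real key and network access, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

The same pattern applies whether the request comes from a chatbot, a trip planner, or a back-office script: your software sends text in, and the hosted model sends text back.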

Using an AI API is similar to handing an assignment to an intern via a combination of code, efficiency shortcuts, and natural language.

In the long run, it can save your team time, energy, and resources while giving them access to a wealth of knowledge.

However, in the same way you’d double-check an intern’s work for accuracy, you’ll want to confirm the validity of any AI-generated outputs.

What are the Most Popular AI API Tools in 2024?

While numerous companies have created AI tools, we’ve found that the following platforms are the most popular and best-suited to integrate with common business applications.

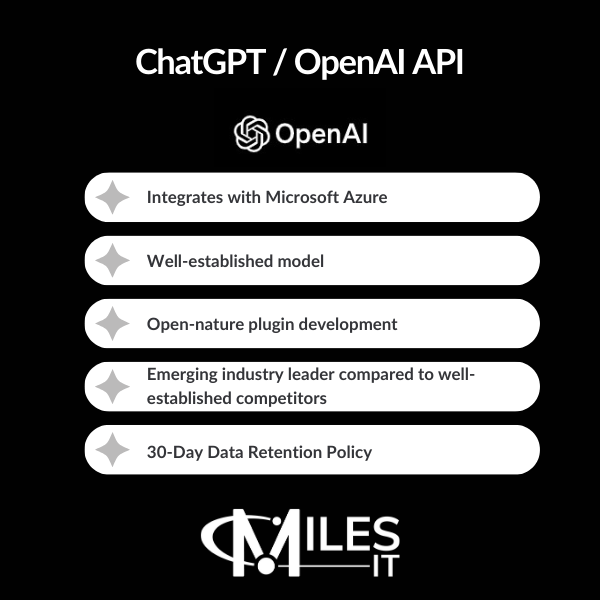

OpenAI’s ChatGPT API

OpenAI’s API is more mature than its competitors’ offerings and is well-known for its robustness. Built upon the powerful GPT architecture, it has clear data privacy practices, maintaining that no customer data is used to improve its models by default.

Pricing is straightforward, based on tokens with costs dependent on the specific model.

However, its impressive capabilities come at a price, and costs can climb quickly depending on your use case.

ChatGPT is the mid-to-premium option with direct integration into Microsoft Azure.

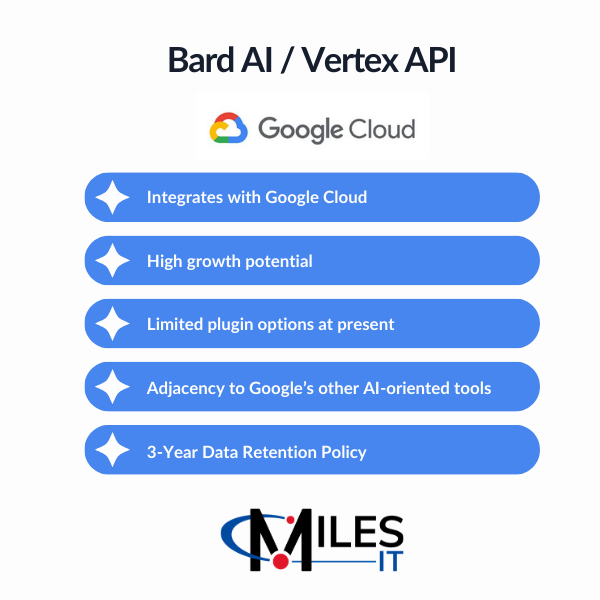

Google’s Vertex AI (Bard)

Google’s Vertex AI, built on the PaLM2 model, is a strong competitor to ChatGPT and is the technology behind Google’s web app, Bard.

Bard offers similar functionalities to OpenAI, but leans more heavily into the Google Cloud ecosystem.

Google’s data privacy policy states that a subset of conversations is reviewable and kept for up to three years, though personal identifiers are removed.

In terms of pricing, the cost model is based on characters rather than tokens and is comparable to mid-level OpenAI models.

In general, the platform is less mature, with limitations in terms of third-party tools and output quality.

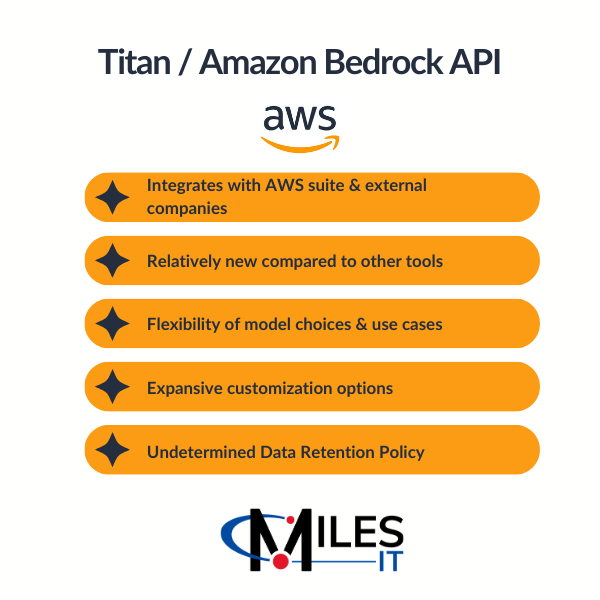

Amazon’s Bedrock

Amazon’s offering, Bedrock, is at an earlier stage than ChatGPT and Bard. It became generally available in September 2023, which makes it harder to fully evaluate in comparison.

Amazon notes that they don’t use customer data for training their “Titan” model and that they do not retain any of your data in service logs.

Their pricing structure includes various options depending on your usage and selected model.

Bedrock’s ability to integrate with other AWS tools and third-party models is a major strength, providing considerable versatility.

Comparing LLM Natural Language Tools

Wondering which AI API is right for your needs? The following comparison focuses primarily on each API and the companies behind the popular AI web applications.

ChatGPT/OpenAI/Microsoft Azure

Overview

ChatGPT, a conversational LLM developed by OpenAI, uses natural language processing through advanced GPT-3.5 and GPT-4 models. It can be used for chatbots, virtual assistants, and plugin support.

OpenAI prioritizes data privacy and security, adhering to a strict 30-day data retention policy with user consent required for data training.

The pricing structure includes a monthly web app subscription and token-based pricing for the API, with cost-effectiveness varying by use case.

The company acknowledges the limitations of the models, emphasizing the need for a balance between practicality and precision.

OpenAI’s hosting minimizes technical requirements for customers, and rate limits are applied at the account level. While the higher-cost GPT-4 offers better results, there are high-value, high-performance options as well. They also make frequent updates to keep customers at the technological forefront.

Although it is less established than giants like Google and Amazon, OpenAI is still regarded as a pioneer in the field.

Integration options, including Microsoft Azure, add further customization and ease of use.

Use Cases for ChatGPT

ChatGPT can be used to assist or fully handle a variety of tasks, including:

- Chatbots

- Virtual Assistants

- Generative Advertising and Communication Creation

- Translation

- Creative Content

- Tutoring/Education

- Text Summary

- Proofreading and Revision

- Code Checking and Conceptualization

- Any combination of the above

Another important note is that the web application supports third-party “plugins,” which can be built into the API handling process. This integration allows for tasks like ordering groceries with Instacart and doing complex math via WolframAlpha.

Flexibility is also a benefit, with the option to use more or less complex models for additional control over expenditure and speed.

Although OpenAI focuses primarily on its language models, it also offers other services, including:

- Whisper, a versatile speech recognition model that transcribes, identifies, and translates multiple spoken languages

- DALL·E 3, which creates original, realistic images and art from a text description and combines concepts, attributes, and styles

Azure AI/Machine Learning tools are also available.

Data Privacy and Security

When collecting and using personal information, OpenAI details a number of ways they leverage user data (email, address, account information, IP address, and more) to operate their business.

Today, common security concerns pertain to data submitted to the language model and how it is used to train the AI models.

At a minimum, OpenAI retains inputs for 30 days in all use cases so it can scan them for Terms of Service (ToS) violations.

The OpenAI API requires an opt-in for any data to be utilized in training the OpenAI language models.

The ChatGPT Web Application has the ability to create “conversations,” which are self-contained, isolated sessions. These sessions pose two security risks.

One, any data you share through ChatGPT will be selectively used to improve the ChatGPT service offering and models.

In addition to the 30-day data retention, opting into “Conversations” also opts you into “teaching” the model.

If you opt out of storing conversations or submit an “opt-out” form, none of your data will be retained or used outside of the 30-day ToS retention policy.

Two, if an unauthorized user accesses your OpenAI account, they can see all of your conversations within the ChatGPT application.

This concern prompted OpenAI’s initial privacy warning to avoid sharing sensitive data through ChatGPT. Unlike the 30-day data retention, the user is responsible for the strength of this security layer.

In all scenarios, a major data breach of OpenAI’s 30-day Retention Database would potentially expose any data held there.

We recommend not sharing proprietary or sensitive information through any AI tool to protect your business and personal data.
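
One way to act on this recommendation is to scrub obvious identifiers from a prompt before it leaves your system. The sketch below is a simplified illustration, not a complete safeguard: the patterns and the `redact` helper are assumptions made for this example, and they only catch email addresses and long digit runs.

```python
import re

# Assumption: simple patterns for illustration only; real redaction needs
# broader coverage (names, addresses, internal project names) and human review.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
LONG_NUMBER = re.compile(r"\b\d{9,}\b")


def redact(text):
    """Replace email addresses and long digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_NUMBER.sub("[NUMBER]", text)


prompt = "Summarize the complaint from jane.doe@example.com about account 123456789012."
print(redact(prompt))
```

A filter like this reduces accidental exposure, but it does not replace a policy on what your team is allowed to submit in the first place.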

Pricing

OpenAI leverages two separate payment models, depending on the usage.

Web Application: Monthly subscription subject to change. $20/mo for limited access to GPT-4, or free access to GPT-3.5.

API: Multiple models, each with different capabilities and price points. Prices are per 1,000 tokens. You can think of tokens as pieces of words, where 1,000 tokens are about 750 words. This paragraph is 35 tokens.
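
The arithmetic behind token-based pricing can be sketched in a few lines. This example uses the rule of thumb above (1,000 tokens for roughly 750 words) instead of a real tokenizer, and the price is a hypothetical figure chosen for illustration, not a quoted rate.

```python
TOKENS_PER_WORD = 1000 / 750  # rule of thumb from the text: 1,000 tokens per 750 words


def estimate_tokens(text):
    """Roughly estimate token count from word count (not a real tokenizer)."""
    return round(len(text.split()) * TOKENS_PER_WORD)


def estimate_cost(tokens, price_per_1k_tokens):
    """Cost in dollars for a given number of tokens at a per-1,000-token price."""
    return tokens / 1000 * price_per_1k_tokens


# Hypothetical price for illustration; check the provider's current price list.
PRICE_PER_1K = 0.002

document = "word " * 1500  # stand-in for a 1,500-word document
tokens = estimate_tokens(document)
print(f"{tokens} tokens, about ${estimate_cost(tokens, PRICE_PER_1K):.4f}")
```

Keep in mind that providers typically bill for both the text you send and the text the model returns, so an estimate should include the expected length of the response.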

Limitations

Currently, OpenAI is unable to guarantee any answers its LLMs provide. This is true of each of the subsequent tools as well.

Just like with other information on the internet, it’s important to verify responses with credible sources.

ChatGPT’s primary limitation is that you aren’t automatically pulled into any cloud ecosystem unless you use Azure.

Integration and Customization

ChatGPT is quickly becoming the industry standard, so plugin support, third-party development approaches, and documentation are already far more widely available than for other options.

From a business perspective, the main benefit is the ability to use this tool through the Microsoft Azure service.

Bard AI / Vertex / Google

Overview

Bard AI is a web application based on Google’s PaLM2 model, providing a unified interface for AI services through Vertex AI and other Google Cloud tools. While its plugin options are limited, the technology offers potential for growth.

Google places high importance on data privacy and security, ensuring anonymized submissions and separate data storage from Google accounts. However, data retention lasts up to three years.

Vertex AI’s pricing uses a character-based approach, with costs varying according to the type of processing.

Google’s offering operates on a different dataset and technology than “GPT,” potentially influencing the type of generated results. Still, integration with Google Cloud could offer numerous AI-oriented tools in the future.

While the quality of Bard/PaLM2’s output is reportedly lower, Google’s reputation promises long-term accountability and user privacy.

Though its documentation might not be as user-friendly as OpenAI’s, the extensive information and integrated Google Cloud support ensure sufficient assistance.

Use Cases for Bard

Google’s options for generative large language model interfaces/training are similar in intent to the other offerings.

Vertex PaLM2 API on Google Cloud is basically the Google equivalent of the OpenAI API.

It is a “unified” generative LLM interface that combines training, inputs, and outputs.

Like the OpenAI API, PaLM2 creates text or code-based answers and can understand the larger context of a conversation.

It understands, categorizes, condenses, and separates information depending on user requests and inputs.

One recent development is Google’s Gemini update in December 2023, its most significant upgrade since Bard’s initial release.

Gemini can support multimodal prompts, and Google suggests that it will exceed the capabilities of GPT-3.5. The company states that Gemini can understand a wide variety of information types and incorporate powerful reasoning abilities into its responses.

Although developers can currently use the Gemini API, it remains in preview mode, with limited support and features as the rollout continues.

Data Privacy and Security

When it comes to security and privacy concerns, Google shared in 2020 that they do not train foundation models with user data.

However, Bard’s privacy/data policy states that they do use user submissions to help improve Bard’s safety and overcome LLM issues.

Google notes that “conversations are reviewable by trained reviewers and kept for up to three years, separately from your Google Account.”

Though Google utilizes automation to separate potentially identifiable information from submissions, it’s best not to share any proprietary, sensitive, or identifying data in Bard conversations or any AI tool.

Pricing

Vertex AI takes a price-per-character approach rather than a token approach. This structure is easier to understand but tracks the underlying computation less precisely, since generative LLMs process tokens rather than characters.

Remember that the quality of the output matters. A repeated attempt to get a proper response (and AI often takes repeated attempts) comes at full price.

Better models are more expensive but will also require fewer attempts.
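
The trade-off between price and repeated attempts can be sketched with some simple arithmetic. The example below assumes a single per-character rate for input and output, and it assumes each attempt succeeds independently with a fixed probability, so the expected number of attempts is one divided by the success rate. All prices and success rates are hypothetical.

```python
def cost_per_attempt(input_chars, output_chars, price_per_1k_chars):
    """Character-based cost of one request/response pair, in dollars."""
    return (input_chars + output_chars) / 1000 * price_per_1k_chars


def expected_cost(attempt_cost, success_rate):
    """Expected spend until one acceptable answer.

    Assumes each attempt succeeds independently with the same probability,
    so the expected number of attempts is 1 / success_rate.
    """
    return attempt_cost / success_rate


# Hypothetical prices and success rates, chosen only to illustrate the trade-off.
cheap = expected_cost(cost_per_attempt(2000, 1000, 0.0005), success_rate=0.25)
premium = expected_cost(cost_per_attempt(2000, 1000, 0.0015), success_rate=0.9)
print(f"cheap model:   ${cheap:.4f} per usable answer")
print(f"premium model: ${premium:.4f} per usable answer")
```

With these made-up numbers, the model that costs three times as much per character ends up cheaper per usable answer, which is why output quality belongs in any price comparison.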

Limitations

Upon its initial release, Bard had a weaker foundation than the GPT-3.5 model popularized by ChatGPT. There are also reports that the API fails to deliver information more often than the Bard web application does, because the web application can perform Google searches for anything it does not know.

The API doesn’t include this functionality or a clear way to implement it.

With the recent release of Gemini, we’ll have to continue monitoring Bard’s capabilities and how they may evolve as a result.

Integration and Customization

The primary benefit of using the Google Cloud Vertex PaLM2 Generative AI API is the adjacency to its other available AI-oriented tools.

These tools include Google Maps pathfinding and big data analysis. In September 2023, Google released updates that allow Bard to integrate with other Google Workspace services, like Gmail, Docs, Maps, YouTube, and more.

These will likely form the basis of a proprietary “app store” in the future, providing plugin-style functionality similar to OpenAI’s.

However, the main difference between OpenAI and Google’s tools is the open nature of OpenAI’s plugin development, which already has thousands of plugins from various sources.

Titan / Amazon Bedrock

Overview

Built around Amazon’s proprietary “Titan Text” model, Amazon Bedrock serves as a gateway to several AI models.

It offers versatility in functions like text generation, chatbots, search, summarization, image generation, and personalization.

Bedrock integrates various generative AI tools and other AWS offerings, allowing users to choose foundation models that suit their needs.

This platform was made generally available in September 2023, so it is still relatively new compared to OpenAI and Google’s tools.

Amazon notes that no customer data is used to train their underlying models, but data retention periods still need clarification.

However, Bedrock’s integration with the AWS suite and with external companies’ proprietary models offers expansive options for customization.

While Amazon is a reputable company, its standing in the AI domain might be less robust, given the early stage of its generative AI offerings. As of now, documentation and support may be limited.

Use Cases for Titan

Like OpenAI and Google’s offerings, Titan has a variety of use cases and can be applied to many scenarios.

- Text generation: Create new pieces of original content, such as short stories, essays, social media posts, and webpage copy

- Chatbots: Build conversational interfaces such as chatbots and virtual assistants to enhance the user experience for your customers

- Search: Search, find, and synthesize information to answer questions from a large corpus of data

- Text summarization: Get a summary of textual content, such as articles, blog posts, books, and documents, without having to read the full content

- Image generation: Create realistic and artistic images of various subjects, environments, and scenes from language prompts

- Personalization: Help customers find what they’re looking for with more relevant and contextual product recommendations than word matching

Alongside these offerings, Amazon provides several other tools for training, tuning, creating, and using generative AI models.

Plus, Amazon has a suite of other AI-imbued tools, such as Rekognition and Lex, through AWS offerings.

Data Privacy and Security

Amazon notes that users have complete control over the data they submit when modifying foundation models. Still, it’s difficult to find specific data retention, security, or privacy policy content for their Bedrock/Titan Generative AI Foundational models.

However, the foundation models are not trained with user data, and Amazon states that Bedrock is compliant with standards including ISO, SOC, CSA STAR Level 2, and more.

Still, like with the other offerings, it’s best to avoid sharing potentially identifying or sensitive data through the tool.

Pricing

Bedrock offers two pricing structures depending on your needs: On-Demand & Batch pricing and Provisioned Throughput pricing.

With On-Demand & Batch pricing, users pay as they go and utilize a token approach similar to OpenAI.

Provisioned Throughput pricing allows users to purchase tokens per hour for their specific application needs. This hourly structure requires users to commit to 1 or 6 months of use.
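
A quick break-even calculation can help when choosing between the two structures. The sketch below treats Provisioned Throughput as a flat hourly fee and compares it with per-token On-Demand spend. Both figures are hypothetical and are not Amazon’s actual rates.

```python
def on_demand_cost(tokens_per_hour, price_per_1k_tokens):
    """Hourly cost in dollars when paying per token."""
    return tokens_per_hour / 1000 * price_per_1k_tokens


def break_even_tokens_per_hour(hourly_commitment, price_per_1k_tokens):
    """Hourly token volume at which a fixed hourly fee matches on-demand spend."""
    return hourly_commitment / price_per_1k_tokens * 1000


# Hypothetical figures for illustration; not Amazon's actual rates.
ON_DEMAND_PRICE_PER_1K = 0.001
PROVISIONED_PER_HOUR = 20.0

threshold = break_even_tokens_per_hour(PROVISIONED_PER_HOUR, ON_DEMAND_PRICE_PER_1K)
print(f"Provisioned throughput pays off above {threshold:,.0f} tokens per hour")
```

Because the hourly structure comes with a one- or six-month commitment, the comparison is most useful when it is based on sustained traffic rather than peak usage.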

Limitations

Compared to ChatGPT and Google Bard, Titan is still relatively new.

Users mention that Bedrock isn’t always intuitive to use, and some level of learning is required.

However, users also note the flexibility of the service because of the variety of available models.

Integration and Customization

Bedrock is a suitable choice for AWS users because it integrates with other AWS suite products. Another interesting feature is that Amazon Bedrock can integrate with models from external companies.

As other companies build their own proprietary models, Amazon can integrate with their tools, allowing you to plug entire third-party models (such as Stability AI’s Stable Diffusion, AI21 Labs’ multilingual Jurassic-2 model, Anthropic’s “Claude” chat model, etc.) into the service.

Which AI API Should You Use?

If you’re looking to integrate existing AI functionality into your software, you should take your specific needs and current technologies into account.

Although each tool’s integration with popular platforms like Microsoft, Google, and AWS may sway your decision, it’s also important to keep functionality, flexibility, and limitations in mind, along with security and data privacy concerns.

All of the tools offer the ability to fine-tune the models so they can more closely align with your needs. In addition, each company has noted that it does not use customer data for training foundation models, although their data retention and security policies differ.

In general, OpenAI is an excellent choice if you want to use well-established models and are interested in open-nature plugin development. It also includes thorough documentation. However, OpenAI doesn’t automatically pull you into any cloud ecosystem unless you use Azure.

Bard/Vertex AI makes sense if you’re looking to tap into the power of the Google Cloud environment. While it may reportedly have lower-quality responses right now, without considering the Gemini update, Google has a strong reputation and states their commitment to accountability and privacy.

Finally, Titan/Amazon Bedrock might be the best choice if you want more flexibility with your choice of models and customizations and already utilize the AWS suite of services. However, remember that this offering is relatively new compared to the other tools.

For all of these tools, data security, privacy, and accuracy will continue to be paramount. We’ll continue to see how ChatGPT’s ongoing modernizations, Gemini’s full release, and Bedrock’s updates shape AI modifications and strategies in the new year.

Want to discuss how you can incorporate AI into your business? Feel free to reach out to our team at any time and book a call.